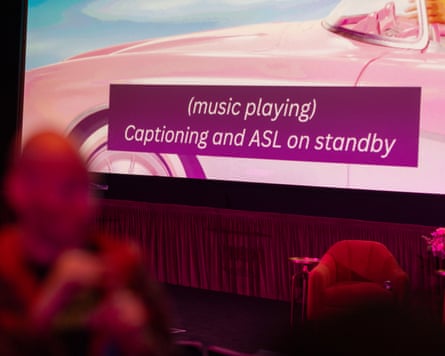

Is artificial intelligence going to destroy the SDH [subtitles for the deaf and hard of hearing] industry? It’s a valid question because, while SDH is the default subtitle format on most platforms, the humans behind it – as with all creative industries – are being increasingly devalued in the age of AI. “SDH is an art, and people in the industry have no idea. They think it’s just a transcription,” says Max Deryagin, chair of Subtle, a non-profit association of freelance subtitlers and translators.

The thinking is that AI should simplify the process of creating subtitles, but that is way off the mark, says Subtle committee member Meredith Cannella. “There’s an assumption that we now have to do less work because of AI tools. But I’ve been doing this now for about 14-15 years, and there hasn’t been much of a difference in how long it takes me to complete projects over the last five or six years.”

“Auto transcription is the only place where I have seen some positive advancements,” Cannella adds, “but even then that doesn’t affect the total amount of time that it takes to produce an SDH file.” So many corrections are needed that there’s no net benefit compared to using older software.

Moreover, the quality of AI-generated SDH is so poor that much work is needed to bring it up to standard – but because human subtitlers are often assigned these tasks as “quality control”, payment is minimal. Subtle notes that many of its members are now unable to make a living wage.

“SDH rates are not great to start with, but now they’re so low that it’s not even worth taking the work,” says Rachel Jones, audiovisual translator and member of the Subtle committee. “It really undermines the role that we play.”

And it’s a vital role. Teri Devine, associate director of inclusion at the Royal National Institute for Deaf People, says: “For people who are deaf or have hearing loss, subtitles are an essential service – allowing them to enjoy film and TV with loved ones and stay connected to popular culture.”

The deaf and hard-of-hearing community is not monolithic, which means subtitlers are juggling a variety of needs in SDH creation. Jones says: “Some people might say that having the name of a song subtitled is completely useless, because it tells them nothing. But others might have a memory of how the song went, and they’ll be able to connect to it through the song’s title. Some people think that emotional cues get in the way and tell them how to feel rather than being objective. Others want them.”

Subtitling involves much creative and emotionally driven decision-making, two things that AI does not currently have the capacity for. When Jones first watches a show, she writes down how the sounds make her feel, then works out how to transfer her reactions into words. Next, she determines which sounds need to be subtitled and which are excessive. “You can’t overwhelm the viewer,” she says. It is a delicate balance. “You don’t want to describe something that would be clear to the audience,” Cannella says, “and sometimes, what’s going on on the screen is much more important than the audio. The gentle music might not matter!”

AI is unable to decide which sounds are important. “Right now, it’s not even close,” Deryagin says. He also stresses the importance of the broader context of a film, rather than looking at isolated images or scenes. In Blow Out (1981), for example, a mysterious sound is heard. Later, that sound is heard again – and, for hearing viewers, reveals a major plot point. “SDH must instantly connect those two things, but also not say too much in the first instance, because viewers have to wonder what’s going on,” he says. “The same sound can mean a million different things. As humans, we interpret what it means and how it’s supposed to feel.”

“You can’t just give an algorithm a soundtrack and say, ‘here are the sounds, figure it out’. Even if you give it metadata, it can’t get anywhere near the level of professional work. I’ve done my experiments!”

After subtitles from Stranger Things such as “[Eleven pants]” and “[Tentacles squelching wetly]” went viral, Netflix shared a glimpse of its SDH processes via an interview with its subtitlers. The company declined to comment further on its use of AI in its subtitling. The BBC told the Guardian: “There is no use of AI for subtitles on TV,” though much of its work is outsourced to Red Bee Media, which last year published a statement on its use of AI in SDH creation for Australian broadcaster Network 10.

Jones says that linguists and subtitlers are not necessarily against AI – but at the moment, it’s making practitioners’ lives harder rather than easier. “In every industry, AI is being used to replace all the creative things that bring us joy instead of the boring, tedious tasks we hate doing,” she says.
