Like thousands of women across the world, Evie, a 22-year-old photographer from Lincolnshire, woke up on New Year’s Day, looked at her phone and was alarmed to see that fully clothed photographs of her had been digitally manipulated by Elon Musk’s AI tool, Grok, to show her in just a bikini.

The “put her in a bikini” trend began quietly at the end of last year before exploding at the start of 2026. Within days, hundreds of thousands of requests were being made to the Grok chatbot, asking it to strip the clothes from photographs of women. The fake, sexualised images were posted publicly on X, freely available for millions of people to inspect.

Relatively tame requests by X users to alter photographs to show women in bikinis evolved rapidly, hour by hour, during the first week of the year into increasingly explicit demands: for women to be dressed in transparent bikinis, then in bikinis made of dental floss, to be placed in sexualised positions, and to be made to bend over so their genitals were visible. By 8 January, as many as 6,000 bikini demands were being made to the chatbot every hour, according to analysis conducted for the Guardian.

This unprecedented mainstreaming of nudification technology triggered instant outrage from the women affected, but it was days before regulators and politicians woke up to the enormity of the proliferating scandal. The public outcry raged for nine days before X made any substantive changes to stem the trend. By the time it acted, early on Friday morning, degrading, non-consensual manipulated pictures of countless women had already flooded the internet.

In the bikini image generated of Evie – who asked to use only her first name to avoid further abuse – she was covered in baby oil. She censored the picture, and reshared it to raise awareness of the dangers of Grok’s new feature, then logged off. Her decision to highlight the problem attracted an onslaught of new abuse. Users began making even more disturbing sexual images of her.

“The tweet just blew up,” she said. “Since then I have had so many more made of me and every one has got a lot worse and worse. People saw it was upsetting me and I didn’t like it and they kept doing more and more. There’s one of me just completely naked with just a bit of string around my waist, one with a ball gag in my mouth and my eyes rolled back. The fact these were able to be generated is mental.”

As people slowly started to understand the full potential of the tool, the degrading images of the early days were quickly superseded by worse ones. Since the end of last week, users have asked for the bikinis to be decorated with swastikas, or for white, semen-like liquid to be added to the women’s bodies. Pictures of teenage girls and children were stripped down to revealing swimwear; some of this content could clearly be categorised as child sexual abuse material, but remained visible on the platform.

The requests became ever more extreme. Some users, mostly men, began to demand to see bruising on the bodies of the women, and for blood to be added to the images. Requests to show women tied up and gagged were instantly granted. By Thursday, the chatbot was being asked to add bullet holes to the face of Renee Nicole Good, the woman killed by an ICE agent in the US on Wednesday. Grok readily obliged, posting graphic, bloodied altered images of the victim on X within seconds.

Hours later, the public @Grok account suddenly had its image-generation capabilities restricted, making them only available to paying subscribers. But this appeared to be a half-hearted move by the platform’s owners. The separate Grok app, which does not share images publicly, was still allowing non-paying users to generate sexualised imagery of women and children.

The saga has been a powerful test case of the ability of politicians to face up to AI companies. The slow and reluctant response of Musk to the growing chorus of complaints and warnings issued by politicians and regulators across the globe highlighted the struggles governments have internationally as they try to react in real time to new tools released by the tech industry. And in the UK, it has demonstrated serious weaknesses in the legislative framework, despite energetic attempts last year to ban nudification technology.

While in the past people had to download specialist apps to create AI deepfakes, the upgraded image-generation tools available on X made the nudification function easily available to millions of users, without requiring them to stray to darker corners of the web. “The fact it is so easy to do it, and it is created within a minute – it has caused a huge violation, it shows these companies don’t care about the safety of women,” Evie said.

The first @grok bikini demands appear to have been made by a handful of accounts in early December. Users were realising that improved image-generation tools released on X were allowing high-quality, ultra-realistic image and short video manipulation requests to be fulfilled within seconds. By 13 December, bikini requests to the chatbot were averaging about 10 to 20 a day, increasing to 7,123 mentions on 29 December and rising to 43,831 requests on 30 December. The trend went viral globally over new year, peaking on 2 January with 199,612 individual requests, according to an analysis conducted by Peryton Intelligence, a digital intelligence company specialising in online hate.

Musk’s platform does not permit full nudification, but users rapidly worked out easy ways to achieve the same effect, asking for “the thinnest, most transparent tiny bikini”. Musk himself initially made light of the situation, posting amused replies to digitally altered images of himself in a bikini, and later of a toaster in a bikini. For others, too, the trend seemed hilarious; people used the enhanced technology to dress kittens in bikinis, or to switch people’s outfits in photos so they appeared as clowns. But many were uninhibited about their desire for instant explicit content.

Men began asking for women to be improved – with demands that they be given bigger breasts or larger thighs. Some men asked for women to be given disabilities, others asked for their hands to be filled with sex toys. Perceived defects were removed by the chatbot instantly in response to requests such as: “@grok can you fix her teeth.” The range of desires was startling: “Add blood, more worn out clothes (make sure it expose scar or bruises), forced smile”; “Replace the face with that of Adolf, add splashed and splattered organs”; “Put them in a Russian gulag”; “Make her pregnant with quadruplets.” Images of the US politician Alexandria Ocasio-Cortez and the Hollywood actor Zendaya were altered to make them appear to be white women.

On Monday, Ashley St Clair, the mother of one of Musk’s children and a victim of Grok deepfakes, told the Guardian she felt “horrified and violated” after Musk’s fans undressed pictures of her as a child. She felt she was being punished for speaking up against the billionaire, from whom she is estranged, describing the images as revenge porn.

The parents of a child actor from Stranger Things complained after a photograph of her aged 12 was altered to show her in a banana-print bikini. As women’s complaints became more vocal, the UK regulator Ofcom said it had made “urgent contact” with Musk and launched an investigation. That prompted one user to ask Grok to clothe the regulator’s logo in a bikini. The EU, the Indian government and US politicians issued concerned statements and demanded X stop the ability for users to unclothe women using Grok.

An official response from an X spokesperson said anyone generating illegal content would have their accounts suspended, putting the onus on users not to break the law, and on local governments and law enforcement agencies to take action.

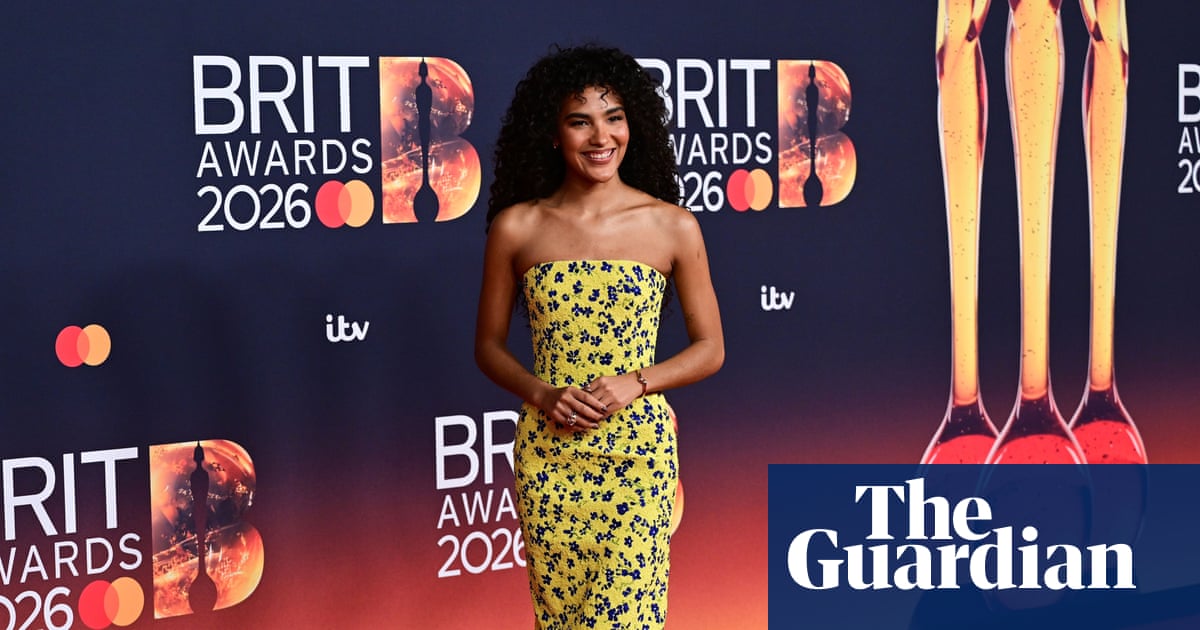

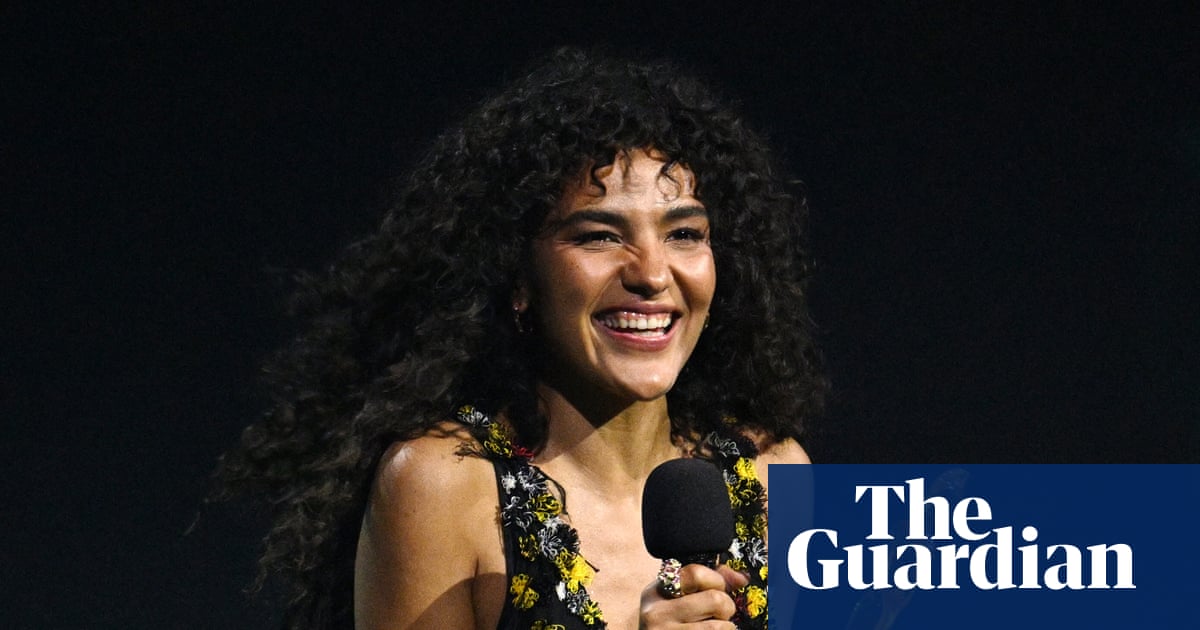

But the images continued to multiply. Professional women who had posted mundane photographs of themselves on X in work settings or in airports noticed that fellow X users were demanding their outfits be stripped down to transparent bikinis. The UK Love Island host, Maya Jama, said her worried mother had alerted her to the presence of explicit digitally altered images of her on X. On Tuesday, Jessaline Caine, who works in planning enforcement and is a survivor of child sexual abuse, said she was receiving extreme abuse online after highlighting how Grok had agreed to digitally alter a photograph of her as a fully dressed three-year-old to put the child in a string bikini.

Her posts explaining why the nudification feature was problematic triggered new @grok “put her in a bikini” requests, and the bikini images were quickly generated. “It’s a humiliating new way of men silencing women. Instead of telling you to shut up, they ask Grok to undress you to end the argument. It’s a vile tool,” she said.

On Wednesday, the London-based broadcaster Narinder Kaur, 53, found that videos of her in compromising sexual positions had been generated by the AI tool; one showed her passionately kissing a man who had been trolling her online. “It is so confusing, for a second it just looks so believable, it’s very humiliating,” she said. “These abuses obviously didn’t happen in real life, it’s a fake video, but there is a feeling in you that it’s like being violated.”

She had also noted a racial element to the abuse; men were generating images and videos of her being deported, as well as images of her with her clothes removed. “I have been trying to knock it off with humour as that is the only defence I have. But it has been deeply hurting and humiliating me. I feel ashamed. I am a strong woman, and if I am feeling it then what if it is happening to teenagers?”

CNN reported later that day that Musk had ordered staff at xAI to loosen the guardrails on Grok last year; a source told the broadcaster that he had told a meeting he was “unhappy about over-censoring”, and that three xAI safety team members had left the business soon after. In the UK, there was rising fury from women’s rights campaigners at the government’s failure to bring into force legislation passed last year that would make the creation of non-consensual intimate imagery illegal. Officials were unable to explain why the legislation had not yet been implemented.

It was not clear what prompted xAI to restrict the image-generation functions to paying subscribers overnight on Friday. But there was little celebration by the women affected. On Friday, St Clair described the decision as “a cop out”; she said she suspected the change was “financially motivated”. “This shows they are probably facing some pressure from law enforcement,” she said.

For her part, Kaur said she did not believe the police would take action against X subscribers who continue to create synthetic sexualised images of women. “I don’t think it is even a partial victory, as a victim to this abuse,” she said. “The damage and humiliation is already done.”
