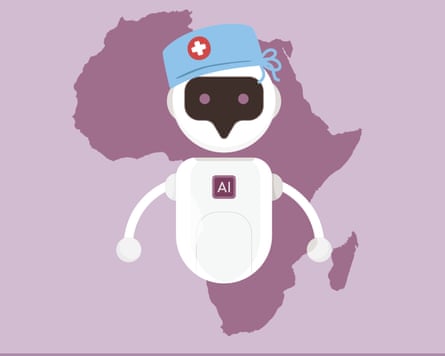

When patients telephone Butabika hospital in Kampala, Uganda, seeking help with mental health problems, they are themselves assisting future patients by helping to create a therapy chatbot.

Calls to the clinic helpline are being used to train an AI algorithm that researchers hope will eventually power a chatbot offering therapy in local African languages.

One person in 10 in Africa struggles with mental health issues, but the continent has a severe shortage of mental health workers, and stigma is a huge barrier to care in many places. AI could help solve those problems wherever resources are scarce, experts believe.

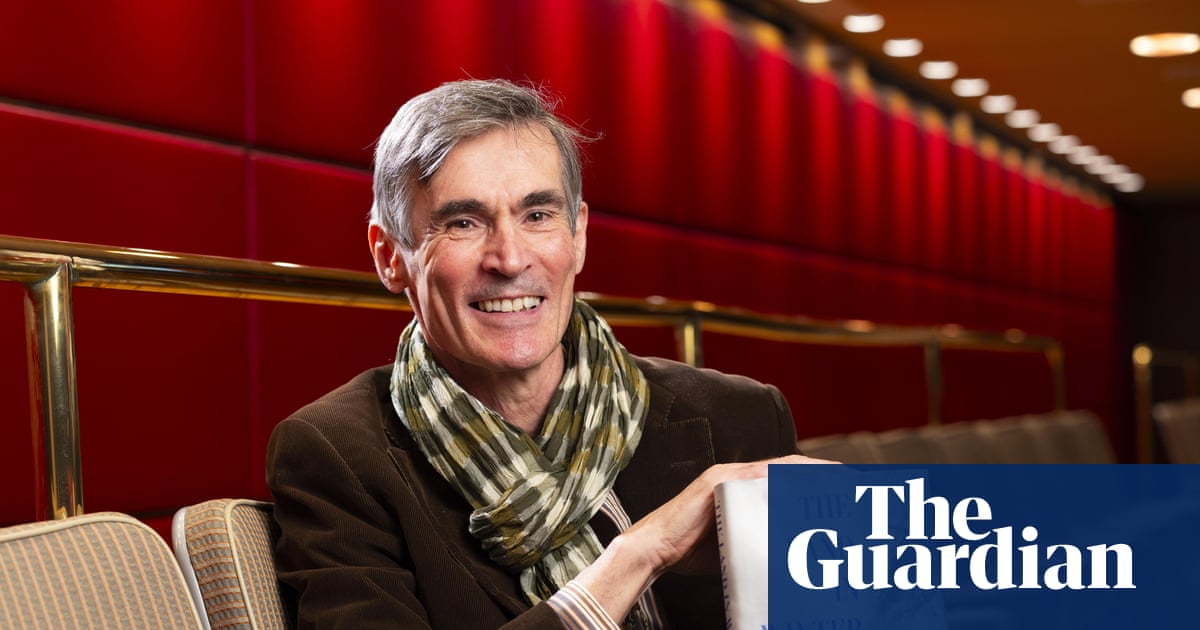

Prof Joyce Nakatumba-Nabende is scientific head of the Makerere AI Lab at Makerere University. Her team is working with Butabika hospital and Mirembe hospital, in Dodoma in neighbouring Tanzania.

Some callers just need factual information on opening times or staff availability, but others talk about feeling suicidal or reveal other red flags about their mental state.

“Someone probably won’t say ‘suicidal’ as a word, or they will not say ‘depression’ as a word, because some of these words don’t even exist in our local languages,” says Nakatumba-Nabende.

After removing patient-identifying information from call recordings, Nakatumba-Nabende’s team uses AI to comb through them and determine how people speaking in Swahili or Luganda – or another of Uganda’s dozens of languages – might describe particular mental health disorders such as depression or psychosis.

In time, recorded calls could be run through the AI model, which would establish that “based on this conversation and the keywords, maybe there’s a tendency for depression, there’s a tendency for suicide [and so] can we escalate the call or call back the patient for follow up”, Nakatumba-Nabende says.

Current chatbots tend not to understand the context of how care is delivered or what is available in Uganda, and are available only in English, she says. The end goal is to “provide mental health care and services down to the patient”, and identify early when people need the more specialised care offered by psychiatrists.

The service could even be delivered over SMS messaging for people who don’t have a smartphone or internet access, Nakatumba-Nabende says.

The advantages of a chatbot are numerous, she says. “When you automate, it’s faster. You can easily provide more services to people, and you can get a result faster than if you were to train someone to do a medicine degree and then specialise in psychiatry and then do the internship and the training.”

Scale and scope are also important: an AI tool is easily accessible at any time. And, Nakatumba-Nabende says, people are reluctant to be seen seeking mental health care in clinics because of stigma. A digital intervention bypasses that.

She hopes the project will mean the existing workforce can “provide care to more people” and “reduce the burden of mental health disease in the country”.

Miranda Wolpert, director of mental health for the Wellcome Trust, which is funding a variety of projects looking at AI for mental health globally, says technology offers promise in diagnosis. “We are very, at the moment, reliant on people filling in, in effect, paper and pencil questionnaires, and it may be that AI can help us think more effectively about how we can identify someone struggling,” she says.

Technology-facilitated treatments might also look very different to the traditional mental health options of either talking therapy or medication, Wolpert says, citing Swedish research on how playing Tetris could alleviate PTSD symptoms.

Regulators are, however, still grappling with the implications of greater use of AI in healthcare. For example, the South African Health Products Regulatory Authority (SAHPRA) and health NGO Path are using funding from Wellcome to develop a regulatory framework.

Bilal Mateen, chief AI officer at Path, says it is important for countries to develop their own regulation. “‘Does this thing operate well in Zulu?’, which is a question that South Africa cares about, is not one that the FDA [US Food and Drug Administration], I think, has ever considered,” he says.

Christelna Reynecke, chief operations officer at SAHPRA, wants users of an AI algorithm for mental health to have the same assurance as someone taking a medicine that it has been checked and is safe. “It’s not going to start hallucinating, and giving you strange results, and causing more harm than good,” she says.

In the background is the spectre of suicides linked to chatbot use, and cases where AI appears to have fuelled psychosis.

Reynecke wants to develop an advanced monitoring system that can identify “risky” outputs from generative AI tools in real time. “It cannot be something that’s an ‘after the event’, so far after the event that you may have put other patients at risk, because you didn’t intervene fast enough,” she says.

The UK regulator, the Medicines and Healthcare products Regulatory Agency (MHRA), has a similar initiative and is working with tech companies to understand how best to regulate AI in medical devices.

Regulators need to decide what risks are important to monitor, says Mateen. Sometimes, the benefits will outweigh the potential harm to the extent that there is “an impetus for us to get this into people’s hands because it will help them”.

While much of the conversation about AI revolves around chatbots such as Google Gemini and ChatGPT, Mateen suggests “there is so much more that AI and generative AI ... could be used to do”, such as training peer counsellors to provide higher quality care, or finding people the best kind of treatment more quickly.

“A billion people around the world today are experiencing a mental health condition,” he says. “We don’t just have a workforce gap in sub-Saharan Africa; we have a workforce gap everywhere – speak to someone in the UK about how long they have to wait for access to talking therapies.

“The unmet need everywhere could be met more effectively if we had better access to safe and effective technology.”
